Combating DNS Amplification Attacks: Strategies for Resilient Infrastructure

We’ve already explored how unmanaged IoT devices silently expand the attack surface and how hidden risks in the DNS layer can undermine visibility and control. Building on that foundation, today we turn to another long-standing threat that grows directly out of those same weaknesses: DNS amplification attacks. Unlike stealthy blind spots or quietly misconfigured devices, amplification is loud, brute-force, and designed to break availability outright. It has powered some of the largest denial-of-service incidents in history, and understanding how it works is essential to building resilient DNS infrastructure.

DNS’s role is foundational yet largely invisible, and that is precisely why it has long been a favored target for attackers. Among the arsenal of distributed denial-of-service (DDoS) techniques, one stands out for its sheer efficiency: the DNS amplification attack. It is elegant in its simplicity, devastating in its effect, and, despite being nearly two decades old, still one of the most dangerous threats to service availability today.

The idea is deceptively simple: take advantage of DNS servers’ willingness to answer queries, and trick them into directing their responses not back to the sender, but to an unsuspecting victim. By forging source IP addresses and asking the kinds of questions that elicit oversized responses, attackers can transform relatively modest traffic into a flood of data dozens of times larger than what they put in. It is a textbook case of asymmetric warfare in networking: small effort, enormous impact.

The consequences have been dramatic. From early attacks that briefly disrupted internet exchanges to massive campaigns that brought down global services, amplification has consistently proven its worth as a weapon for cybercriminals, hacktivists, and even nation-state actors. The scale possible with this technique is staggering: with enough misconfigured servers (tens of thousands have been abused in some campaigns), attackers can unleash floods measured not just in gigabits but in terabits per second, enough to strain even the world’s largest networks.

And here lies the modern challenge. As organizations race toward cloud adoption, IoT proliferation, and global-scale digital services, their dependency on DNS has only deepened. Yet the core weaknesses that make amplification possible have not disappeared. To understand why this threat persists, and how defenders can respond, we need to look closely at how DNS amplification works, trace its history in shaping the DDoS landscape, and explore the strategies that can keep infrastructures resilient when the flood inevitably comes.

How DNS Amplification Works

To appreciate why DNS amplification attacks are so effective, it helps to think about the design choices baked into DNS in the early days of the internet. Back then, resilience and openness were the goals; security was rarely front of mind. DNS runs over UDP by default – a lightweight, connectionless protocol that skips the handshake, and with it any check that a packet really came from the address it claims, in exchange for speed. In the 1980s, this seemed like an elegant solution. In today’s threat environment, it’s a liability.

Here’s the crux of the problem: UDP does not verify the source of a packet. If I send a DNS query to a resolver and claim that it came from your IP address, the resolver has no reason to doubt me. It processes the request and dutifully sends the response to you, not me. That’s reflection: directing someone else’s traffic to an unwitting target.

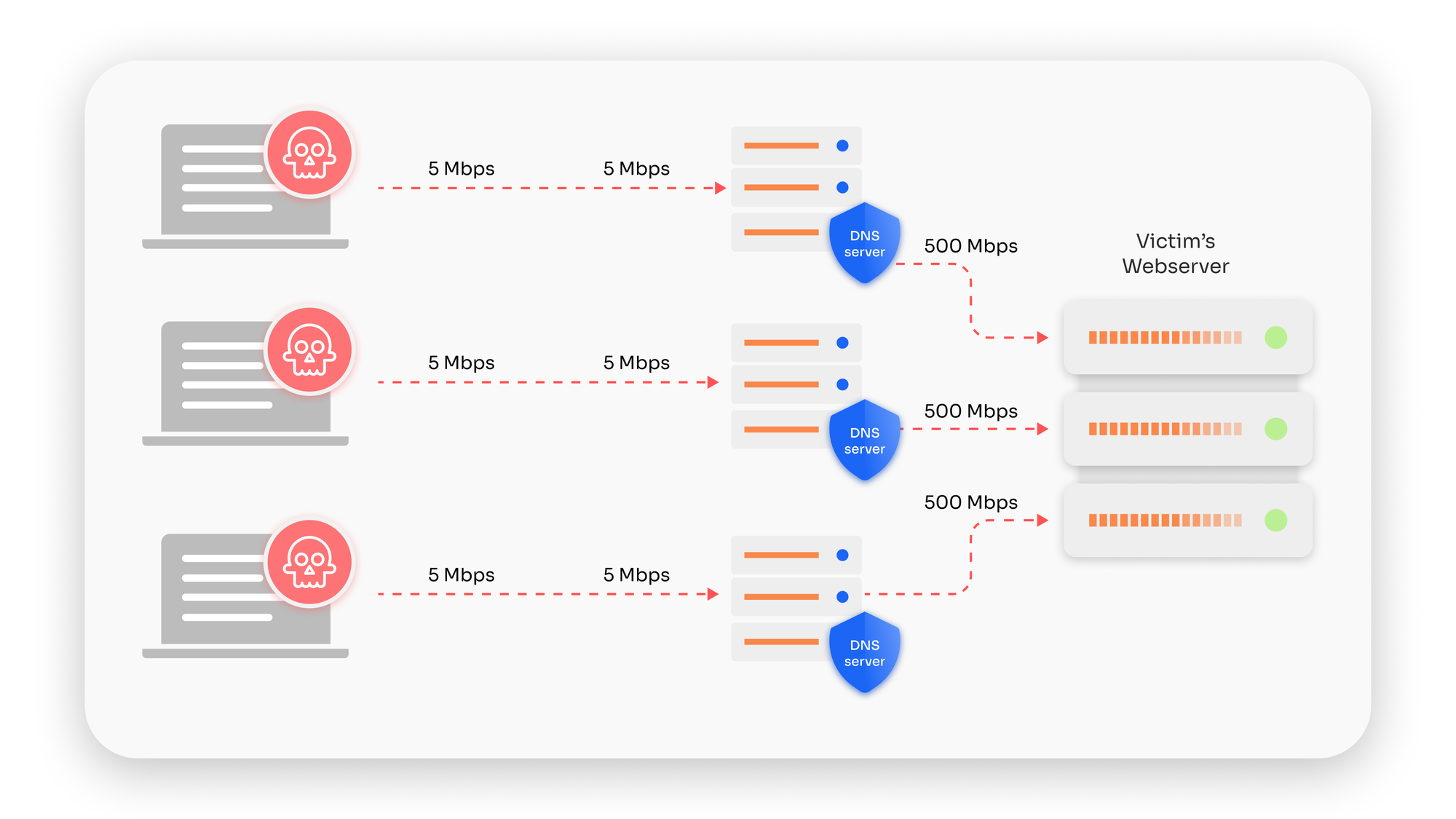

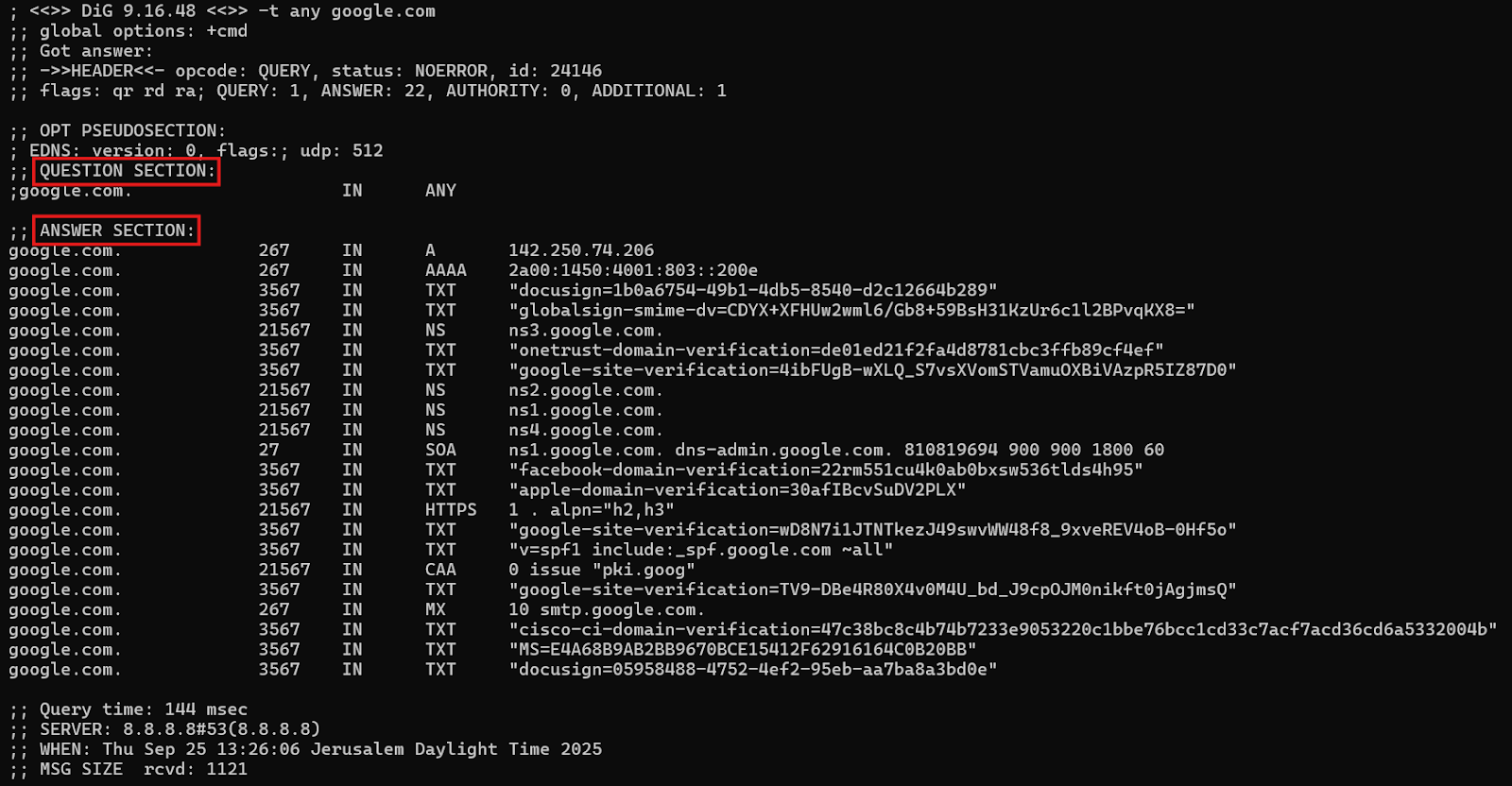

Amplification comes from the mismatch between the size of DNS queries and responses. A query might be just a few dozen bytes. But depending on the question, the answer could be dozens or even hundreds of times larger. Attackers exploit this asymmetry by asking questions designed to produce bloated responses, traditionally with ANY queries, but in recent years also by leveraging DNSSEC-enabled domains whose signed responses can be enormous. Multiply this effect across thousands of vulnerable resolvers, and you have a firehose of data aimed at the victim.
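To make the size asymmetry concrete, here is a minimal Python sketch that builds a DNS query in RFC 1035 wire format and measures it. The domain, the transaction ID, and the 3,000-byte response size are illustrative assumptions, not measurements; real response sizes depend on the zone and on whether it is DNSSEC-signed.

```python
import struct
from typing import Optional

QTYPE_ANY = 255  # ANY pseudo-type
CLASS_IN = 1


def encode_qname(name: str) -> bytes:
    """Encode a domain name as length-prefixed labels ending in a zero byte."""
    out = b""
    for label in name.rstrip(".").split("."):
        raw = label.encode("ascii")
        out += bytes([len(raw)]) + raw
    return out + b"\x00"


def build_query(name: str, qtype: int, edns_udp_size: Optional[int] = None) -> bytes:
    """Build a minimal DNS query message in RFC 1035 wire format."""
    arcount = 1 if edns_udp_size is not None else 0
    # Header: ID, flags (RD=1), QDCOUNT=1, ANCOUNT=0, NSCOUNT=0, ARCOUNT
    header = struct.pack("!HHHHHH", 0x1234, 0x0100, 1, 0, 0, arcount)
    question = encode_qname(name) + struct.pack("!HH", qtype, CLASS_IN)
    message = header + question
    if edns_udp_size is not None:
        # EDNS0 OPT pseudo-RR: root name, TYPE=41, CLASS=advertised UDP size, TTL=0, RDLEN=0
        message += b"\x00" + struct.pack("!HHIH", 41, edns_udp_size, 0, 0)
    return message


def amplification_factor(query_bytes: int, response_bytes: int) -> float:
    """Bytes delivered to the victim per byte the attacker sends."""
    return response_bytes / query_bytes


query = build_query("example.com", QTYPE_ANY, edns_udp_size=4096)
print(len(query))                                        # 40
print(round(amplification_factor(len(query), 3000), 1))  # 75.0 (assumed 3,000-byte response)
```

The ANY query for `example.com` with an EDNS0 OPT record is 40 bytes on the wire, so an assumed 3,000-byte answer means every byte the attacker sends becomes 75 bytes at the victim.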

What makes amplification particularly insidious is the scale-to-effort ratio. A modest botnet can generate millions of spoofed requests per second with very little bandwidth cost to the attacker. Yet the resulting responses saturate the victim’s network links, overwhelm their DNS resolvers, and often spill over to choke upstream providers. It’s the equivalent of sending postcards to every address in a city and making sure all the replies are forwarded to one unlucky mailbox: it doesn’t take much to bury the recipient under an avalanche of letters.

Over the years, researchers have cataloged amplification factors for different DNS configurations, with some exceeding 50x. In other words, one megabit of attacker traffic can translate into 50 megabits raining down on the target. When attacks scale into the terabit range, as they have in recent years, the raw math makes clear why amplification has remained a mainstay in DDoS toolkits.
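The arithmetic behind that claim fits in a few lines. This sketch assumes a fixed query size and a constant amplification factor, and it ignores IP/UDP header overhead, so treat its outputs as order-of-magnitude estimates rather than predictions. The botnet figures are hypothetical.

```python
def reflected_bandwidth(queries_per_second: float, query_bytes: int, factor: float):
    """Return (attacker_bps, victim_bps) for a reflection flood at a constant amplification factor."""
    attacker_bps = queries_per_second * query_bytes * 8  # bytes -> bits
    victim_bps = attacker_bps * factor
    return attacker_bps, victim_bps


def attacker_bps_needed(target_bps: float, factor: float) -> float:
    """Spoofed query bandwidth required to deliver target_bps at the victim."""
    return target_bps / factor


# Hypothetical botnet: 3 million 40-byte queries per second at a 50x factor.
sent, received = reflected_bandwidth(3_000_000, 40, 50)
print(f"sent {sent / 1e6:.0f} Mbps, victim receives {received / 1e9:.0f} Gbps")
# How much spoofed traffic a 1 Tbps flood needs at 50x.
print(f"{attacker_bps_needed(1e12, 50) / 1e9:.0f} Gbps")
```

Under these assumptions, under 1 Gbps of spoofed queries turns into 48 Gbps at the target, and a terabit-scale flood needs only about 20 Gbps of outbound query traffic spread across the botnet.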

DNS Amplification in Action: A Historical Lookup

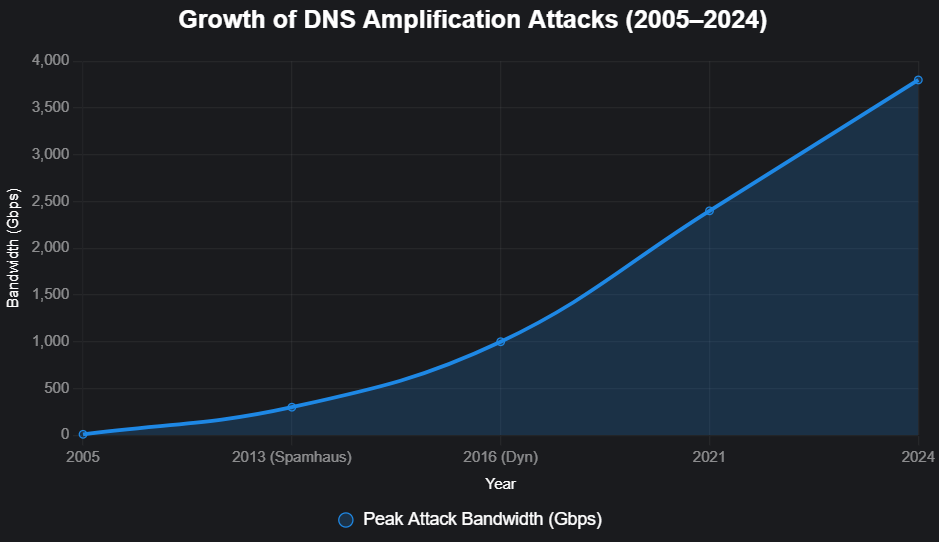

The first known uses of DNS amplification date back to the mid-2000s, when attackers realized that the combination of UDP reflection and oversized responses was a ready-made recipe for denial-of-service. At the time, attacks were relatively modest by today’s standards, enough to knock small websites offline or overwhelm university networks, but rarely of global consequence. That perception changed dramatically in 2013.

In March of that year, the nonprofit spam-fighting organization Spamhaus became the target of what was, at the time, the largest DDoS attack ever recorded. Attackers mobilized tens of thousands of misconfigured DNS resolvers and launched a flood of amplified traffic that peaked around 300 Gbps. For context, most backbone providers of that era provisioned only a few hundred gigabits of capacity for entire regions. The attack didn’t just affect Spamhaus; it strained internet exchanges and transit providers across Europe. Suddenly, amplification wasn’t just a niche problem; it was headline news.

The lesson was clear: by exploiting the openness of DNS, attackers could scale their power far beyond their own infrastructure. And the technique was no longer confined to isolated campaigns. In the years that followed, amplification became a staple in the DDoS playbook, often combined with other vectors to increase the pressure on defenders.

Then came 2016 and the Dyn incident. The attack is usually remembered for the Mirai botnet and its role in weaponizing insecure IoT devices, but it also showed how squarely DNS infrastructure sits in attackers’ crosshairs. Dyn, a major DNS provider, was hammered by a wave of malicious traffic that disrupted access to Twitter, Netflix, Reddit, and dozens of other household names. It wasn’t just one company under siege; entire slices of the internet became unreachable. The attack demonstrated how critical DNS had become to digital life and how fragile the system could be under coordinated assault.

Since then, the numbers have only grown. In the early 2020s, several large-scale amplification campaigns surpassed the 1 Tbps mark, pushing the limits of global mitigation capacity. Some reports have documented peaks nearing 2.5 Tbps, dwarfing the once-unimaginable Spamhaus flood.

One striking example came in 2021, when a European cloud provider reported being hit with a 2.4 Tbps DNS amplification campaign that lasted several minutes. This was not a nuisance attack: it briefly disrupted connectivity across multiple data centers and caused collateral strain for upstream carriers. What made it particularly notable was the speed of execution: attackers ramped from baseline traffic to terabit-scale volumes in under a minute, leaving little time for human intervention. The event underscored the automation now built into modern attack toolkits and the reality that amplification remains a go-to method for adversaries looking to cause maximum disruption with minimum effort.

This historical arc underscores a sobering reality: DNS amplification is not a relic of the past. It has matured into a persistent, evolving threat. Each major incident has taught defenders something new, but it has also emboldened adversaries who know that the basic ingredients (UDP, open resolvers, and asymmetric response sizes) are not going away anytime soon.

The Business Cost

When security teams discuss denial-of-service, the conversation often drifts toward numbers: packets per second, amplification factors, bits per second on the wire. These figures matter, but they obscure what’s really at stake – business continuity. To understand the real impact of DNS amplification attacks, it helps to picture what happens inside an organization the moment one lands.

Imagine an online retailer in the middle of its busiest sales day of the year. Customers across the globe are hammering the site, filling carts, checking out. Then, suddenly, the traffic graphs spike. Except this time it isn’t new customers; it’s an avalanche of DNS responses that the retailer never asked for. Within seconds, the company’s internet connection is saturated. Pages stop loading. Transactions fail mid-payment. Support lines light up with frustrated shoppers who can’t even reach the site. What began as a technical flood of packets has instantly become lost revenue, brand damage, and angry customers tweeting screenshots of errors.

Or take the perspective of a SaaS provider hosting collaboration tools. Its clients expect near-perfect uptime; contracts often include strict service-level agreements. When an amplification campaign overwhelms its DNS servers, it doesn’t matter that the core application servers are still up: the clients’ devices simply can’t resolve the domain names to reach them. To end users, the service is “down.” Within hours, customer success teams are inundated with escalations, executives demand explanations, and the operations team scrambles to bring its DNS servers back online under the weight of terabit-scale floods.

The collateral damage doesn’t stop there. Because amplified traffic travels over shared infrastructure, its effects often ripple outward. Upstream internet service providers, transit carriers, and even unrelated customers can suffer degraded performance simply because they share the same pipes. In some cases, ISPs have had to temporarily null-route entire blocks of IP addresses just to stay afloat, an emergency measure that can take legitimate services offline alongside the target.

For organizations with a global footprint, downtime in one region can cascade into reputational harm elsewhere. A streaming platform knocked offline in Europe on a Friday evening will find itself trending for the wrong reasons worldwide within minutes. And trust, once shaken, is hard to rebuild.

Then there are the indirect costs. Incident response consumes time and resources that could otherwise go toward innovation. Legal and compliance teams may need to prepare reports if SLAs are violated or regulators get involved. And while amplification attacks rarely exfiltrate data, they can serve as smokescreens for parallel intrusions, further compounding the damage.

The lesson is stark: DNS amplification isn’t just about traffic floods. It is about the fragility of business continuity in an environment where availability is king. A single well-timed attack can undo months of customer trust, chip away at revenue streams, and force organizations into costly recovery efforts. Numbers alone can’t capture that human and economic toll.

Building Resilience Against Amplification

When the first flood hits, the instinct is to throw more capacity at the problem. That can work for a little while, but capacity is a blunt instrument: you can buy more pipes, but you can’t buy an architecture that magically stops spoofed packets or abusive queries from being generated in the first place. Real resilience comes from a mix of proactive hygiene, careful shaping of DNS behavior, and partnerships that let you absorb and deflect attack traffic before it reaches your critical links.

Start with the network edge. The single most effective preventive measure is source-address validation: blocking spoofed packets where they enter the network. The practice described in BCP 38 (ingress filtering, RFC 2827) is simple in concept: don’t accept traffic on an interface if its source address doesn’t belong to the networks legitimately reachable through that interface. It’s not glamorous work, often requiring router ACLs, careful peering configs, and coordination with transit providers, and it mainly protects everyone else rather than the network that deploys it. But when applied broadly, it removes the attacker’s ability to forge victim addresses and turns reflection attacks from trivial into far more costly.
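Conceptually, ingress filtering is a membership test. The Python sketch below models a strict per-interface check for customer-facing edge ports; the interface names and prefixes (taken from documentation address ranges) are hypothetical, and on real equipment this logic lives in router ACLs or unicast RPF rather than in application code.

```python
import ipaddress

# Hypothetical customer-facing interfaces and the prefixes assigned behind each one
# (documentation ranges from RFC 5737 and RFC 3849).
CUSTOMER_PREFIXES = {
    "cust-a": [ipaddress.ip_network("198.51.100.0/24")],
    "cust-b": [
        ipaddress.ip_network("203.0.113.0/25"),
        ipaddress.ip_network("2001:db8:10::/48"),
    ],
}


def permit_ingress(interface: str, src: str) -> bool:
    """Accept a packet only if its source address falls inside a prefix assigned to that interface."""
    addr = ipaddress.ip_address(src)
    # Unknown interfaces have no assigned prefixes, so everything is rejected (fail closed).
    # An IPv4 address is never "in" an IPv6 network, so mixed families simply fail the test.
    return any(addr in net for net in CUSTOMER_PREFIXES.get(interface, []))


print(permit_ingress("cust-a", "198.51.100.7"))  # True: legitimate customer source
print(permit_ingress("cust-a", "203.0.113.9"))   # False: spoofed, belongs to another customer
```

Only customer-facing ports get this strict treatment; transit and peering interfaces need looser rules, because legitimate traffic arriving there can carry almost any source address.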

Inside the DNS stack itself, configuration choices make a massive difference. Open resolvers (servers configured to answer recursive queries from anyone) are the usual ammunition stockpile for amplification. Locking recursion down to known clients, or splitting authoritative and recursive roles so that public-facing authoritative servers do not perform recursion for arbitrary IPs, dramatically reduces your exposure. Response Rate Limiting (RRL) and response shaping add another layer: instead of answering every identical query with a full-size response, a server can throttle repetitive requests or send truncated replies (with the TC bit set) that tell the client to retry over TCP. Because TCP requires a handshake that a spoofed source cannot complete, legitimate clients still get their answers while the reflected flood shrinks, which both reduces amplification potential and raises the resource cost for attackers.
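The following Python sketch combines the two ideas in this paragraph: a recursion ACL and a simplified response-rate limiter. Its knobs are loosely modeled on the `responses-per-second` and `slip` settings of BIND’s `rate-limit` option, and it groups clients by /24 (IPv4) or /56 (IPv6) prefix, but it is a hypothetical illustration rather than BIND’s actual algorithm; the allowed networks and thresholds are assumptions.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import Dict, Tuple

# Hypothetical networks allowed to use this server for recursion.
RECURSION_CLIENTS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("192.0.2.0/24"),
]


def recursion_allowed(client: str) -> bool:
    addr = ipaddress.ip_address(client)
    return any(addr in net for net in RECURSION_CLIENTS)


@dataclass
class RateLimiter:
    """Simplified response rate limiting: one token bucket per (client prefix, qname, qtype)."""
    responses_per_second: float = 5.0
    burst: float = 5.0
    slip: int = 2  # every Nth limited response is sent truncated (TC=1) instead of dropped
    buckets: Dict[tuple, Tuple[float, float]] = field(default_factory=dict)
    limited: Dict[tuple, int] = field(default_factory=dict)

    def _key(self, client: str, qname: str, qtype: int) -> tuple:
        addr = ipaddress.ip_address(client)
        prefix_len = 24 if addr.version == 4 else 56
        prefix = ipaddress.ip_network(f"{addr}/{prefix_len}", strict=False)
        return (prefix, qname.lower().rstrip("."), qtype)

    def decide(self, client: str, qname: str, qtype: int, now: float) -> str:
        key = self._key(client, qname, qtype)
        tokens, last = self.buckets.get(key, (self.burst, now))
        # Refill in proportion to elapsed time, never above the burst size.
        tokens = min(self.burst, tokens + (now - last) * self.responses_per_second)
        if tokens >= 1.0:
            self.buckets[key] = (tokens - 1.0, now)
            return "answer"
        self.buckets[key] = (tokens, now)
        self.limited[key] = self.limited.get(key, 0) + 1
        if self.slip and self.limited[key] % self.slip == 0:
            return "truncate"
        return "drop"


def handle(limiter: RateLimiter, client: str, qname: str, qtype: int,
           wants_recursion: bool, now: float) -> str:
    if wants_recursion and not recursion_allowed(client):
        return "refuse"  # e.g. answer REFUSED: this server is not an open resolver
    return limiter.decide(client, qname, qtype, now)
```

With `slip=2`, every second rate-limited response goes out truncated instead of being dropped, so a real client caught behind a spoofed flood can still fetch its answer over TCP. In a live server, `now` would come from a monotonic clock such as `time.monotonic()`.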

Architectural choices matter too. Anycast (announcing the same IP prefix from many geographically distributed points) doesn’t stop an attack, but it changes the problem from “one saturated pipe” to “many smaller pipes,” increasing the chances that traffic can be absorbed or scrubbed before services are affected. Paired with geographically distributed scrubbing (cloud or carrier-based mitigation), anycast turns an otherwise single-point disaster into something operators can manage by shifting traffic dynamically.
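A toy model shows why spreading the same attack over several sites helps. The traffic volumes, site names, capacities, and region-to-site catchments below are invented for illustration; in practice BGP decides which site each source reaches, and reflectors are rarely spread this evenly.

```python
from typing import Dict, Tuple


def site_load(attack_gbps_by_region: Dict[str, float],
              catchment: Dict[str, str],
              capacity_gbps: Dict[str, float]) -> Dict[str, Tuple[float, bool]]:
    """Sum the attack traffic each site attracts and flag sites pushed past capacity."""
    load: Dict[str, float] = {}
    for region, gbps in attack_gbps_by_region.items():
        site = catchment[region]  # which site this region's traffic lands on
        load[site] = load.get(site, 0.0) + gbps
    return {site: (gbps, gbps > capacity_gbps[site]) for site, gbps in load.items()}


# Hypothetical 600 Gbps flood, split by where the reflectors sit.
ATTACK = {"europe": 250.0, "north_america": 200.0, "asia_pacific": 150.0}

# Unicast: one site with 400 Gbps of capacity receives everything.
print(site_load(ATTACK, {r: "fra" for r in ATTACK}, {"fra": 400.0}))
# {'fra': (600.0, True)}

# Anycast: three sites announcing the same prefix, 300 Gbps each.
print(site_load(ATTACK,
                {"europe": "fra", "north_america": "iad", "asia_pacific": "sin"},
                {"fra": 300.0, "iad": 300.0, "sin": 300.0}))
# {'fra': (250.0, False), 'iad': (200.0, False), 'sin': (150.0, False)}
```

Total capacity is higher in the anycast case, but the more important effect is that no single link has to carry the whole flood.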

Visibility and speed of detection are equally essential. Modern defenses feed telemetry (per-query logs, volumetric metrics, and upstream BGP signals) into anomaly detection systems that can spot abnormal query patterns. When a spike is detected, automated playbooks can trigger mitigations: divert traffic to scrubbing centers, apply aggressive RRL policies, or rate-limit particular query types (for example, temporarily disabling ANY or large DNSSEC answers). The best teams rehearse these playbooks in advance; improvisation under pressure loses time.
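As a rough illustration of the detection step, this Python sketch keeps an exponentially weighted moving average (EWMA) of the query rate, flags intervals far above that baseline or with an unusual share of ANY queries, and maps alerts to playbook steps. The thresholds, smoothing factor, and action names are hypothetical; production systems use richer features and their own tooling.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical mapping from alert type to automated playbook steps.
PLAYBOOK = {
    "volume": ["divert_to_scrubbing", "tighten_rrl"],
    "any_ratio": ["minimal_any_responses"],
}


@dataclass
class SpikeDetector:
    """Flag intervals whose query rate far exceeds an EWMA baseline, or whose ANY share is unusual."""
    alpha: float = 0.1            # weight given to the newest quiet interval
    threshold: float = 4.0        # alert when qps > threshold * baseline
    any_share_limit: float = 0.2  # alert when more than 20% of queries are ANY
    baseline: Optional[float] = None

    def observe(self, qps: float, any_share: float) -> List[str]:
        alerts = []
        if self.baseline is not None and qps > self.threshold * self.baseline:
            alerts.append("volume")
        if any_share > self.any_share_limit:
            alerts.append("any_ratio")
        if not alerts:
            # Learn only from quiet intervals so an attack does not inflate the baseline.
            if self.baseline is None:
                self.baseline = qps
            else:
                self.baseline = (1 - self.alpha) * self.baseline + self.alpha * qps
        return alerts


def actions_for(alerts: List[str]) -> List[str]:
    return sorted({step for alert in alerts for step in PLAYBOOK[alert]})


detector = SpikeDetector()
for qps, any_share in [(1000, 0.01), (1100, 0.02), (9000, 0.55)]:
    alerts = detector.observe(qps, any_share)
    print(qps, alerts, actions_for(alerts))
```

In this run, the first two intervals establish a baseline of roughly 1,000 queries per second, and the third trips both alerts, which in turn selects scrubbing, tighter RRL, and minimal ANY responses.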

There’s also a practical trade-off defenders must accept: hardening DNS for availability sometimes means giving up ideal DNS behavior. Temporarily capping the EDNS0 UDP buffer size, pruning DNSSEC responses, or forcing specific queries over to TCP are blunt but effective emergency steps. The goal is availability first: make sure users can resolve names even if the responses are slightly constrained, because a trimmed but working DNS is far better than none at all.
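Those emergency steps can be expressed as a small response-shaping policy. This Python sketch assumes a 1232-byte cap (the EDNS buffer size recommended by DNS Flag Day 2020), answers ANY minimally in the spirit of RFC 8482, and sets the TC bit when an answer would exceed the cap so legitimate clients retry over TCP. The class, function, and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

QTYPE_ANY = 255
CLASSIC_UDP_LIMIT = 512  # RFC 1035 limit for clients that send no EDNS0 OPT record


@dataclass
class EmergencyPolicy:
    max_udp_payload: int = 1232  # incident-time cap on the EDNS0 UDP size we honor
    minimal_any: bool = True     # answer ANY with a small subset, RFC 8482 style


def shape_udp_response(policy: EmergencyPolicy, qtype: int,
                       client_edns_size: Optional[int], full_len: int) -> str:
    """Decide how to answer over UDP: 'minimal_any', 'send', or 'truncate' (TC=1, retry over TCP)."""
    if qtype == QTYPE_ANY and policy.minimal_any:
        return "minimal_any"
    if client_edns_size is None:
        limit = CLASSIC_UDP_LIMIT
    else:
        # Advertised sizes below 512 are treated as 512, then capped by the policy.
        limit = min(max(client_edns_size, CLASSIC_UDP_LIMIT), policy.max_udp_payload)
    return "send" if full_len <= limit else "truncate"


policy = EmergencyPolicy()
print(shape_udp_response(policy, QTYPE_ANY, 4096, 3000))  # minimal_any
print(shape_udp_response(policy, 48, 4096, 1800))         # truncate (large DNSKEY answer)
print(shape_udp_response(policy, 1, 4096, 300))           # send
```

The client advertised 4096 bytes in each case, but the policy honors at most 1232, so the large DNSKEY answer is pushed to TCP while small answers flow normally.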

Finally, mitigation is a social problem as much as a technical one. ISPs, IXPs, cloud providers, and downstream customers must coordinate. When a multi-terabit flood appears, successful containment typically involves several parties: the victim’s NOC, upstream transit, DDoS mitigation providers, and sometimes national CERTs or IXPs. Threat intelligence (lists of abused open resolvers, signatures of known botnet behavior, and rapid sharing of indicators) shortens detection and reduces collateral damage.
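On the victim side, one useful indicator to share is the list of resolvers sending answers to questions nobody asked. This Python sketch flags DNS responses that match none of our outstanding queries and ranks their sources as candidate abused reflectors. The packet record type and sample addresses are hypothetical, and at real attack volumes the same idea would typically run on sampled traffic rather than on every packet.

```python
from collections import Counter
from typing import Iterable, List, NamedTuple, Set, Tuple


class DnsPacket(NamedTuple):
    """Hypothetical parsed-packet record; field names are illustrative."""
    src_ip: str
    src_port: int
    dst_port: int
    txid: int
    is_response: bool


def candidate_reflectors(packets: Iterable[DnsPacket],
                         outstanding: Set[Tuple[str, int, int]],
                         top_n: int = 5) -> List[Tuple[str, int]]:
    """Rank sources of DNS responses that match no query we actually sent.

    outstanding holds (resolver_ip, our_source_port, txid) for every query we issued.
    """
    counts: Counter = Counter()
    for p in packets:
        if not (p.is_response and p.src_port == 53):
            continue
        if (p.src_ip, p.dst_port, p.txid) not in outstanding:
            counts[p.src_ip] += 1  # unsolicited: likely a reflector answering a spoofed query
    return counts.most_common(top_n)


sent = {("192.0.2.53", 40000, 7)}
seen = [
    DnsPacket("192.0.2.53", 53, 40000, 7, True),  # answer to our own query
    DnsPacket("198.51.100.1", 53, 80, 111, True),
    DnsPacket("198.51.100.1", 53, 80, 112, True),
    DnsPacket("203.0.113.9", 53, 443, 5, True),
    DnsPacket("198.51.100.1", 53, 80, 113, True),
]
print(candidate_reflectors(seen, sent))  # [('198.51.100.1', 3), ('203.0.113.9', 1)]
```

A ranked list like this is the kind of indicator that upstream providers and mitigation partners can act on quickly, for example by filtering or contacting the operators of the worst offenders.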

For organizations that prefer to outsource part of this complexity, professionally managed protected DNS services offer baked-in filtering, global anycast presence, and automated traffic shaping that are designed to absorb peak loads. Such services don’t replace good internal hygiene, but they can be an effective part of a layered defense: they provide filtering close to the edge, reduce the blast radius of amplification, and simplify the operational burden during an incident.

Why Amplification Still Matters

It’s tempting to think of DNS amplification as an “old” attack. After all, the technique has been public for nearly twenty years. Surely, the industry must have solved it by now? The truth is less comforting. The same fundamental weaknesses that made the Spamhaus flood possible in 2013 still exist in 2025: UDP’s lack of source validation, the persistence of open resolvers, and the asymmetric relationship between queries and responses. What has changed is scale. Bandwidth is cheaper, botnets are larger, and attack tools are more automated than ever before.

In that sense, amplification is not a relic; it’s a constant. Whenever adversaries want a blunt, reliable way to take something offline, DNS remains on the shortlist of options. That persistence should be a wake-up call. If a protocol as mature and as widely scrutinized as DNS can still be turned into a weapon with such ease, defenders can’t afford to become complacent.

What amplification teaches us is that resilience isn’t about a single silver bullet. No single appliance, no single policy, and no single provider can guarantee immunity. Instead, resilience is about layers: strong configurations that reduce your own attack surface, capacity and architecture that absorb what inevitably gets through, and partnerships that extend your defensive reach beyond your own perimeter. When those layers are in place, an attack becomes a disruption rather than a catastrophe.

Looking ahead, the stakes will only rise. The continued growth of IoT (millions of insecure sensors, cameras, and appliances connecting to the network every day) expands the pool of systems that can be conscripted into botnets. At the same time, businesses are concentrating their operations around fewer DNS providers and cloud platforms, creating tempting single points of failure for attackers. The combination is volatile: larger weapons, fewer shields.

But the future is not all bleak. There is a growing recognition across the industry that DNS security is foundational, not optional. More ISPs are implementing BCP 38 filtering by default. More enterprises are locking down their resolvers and disabling dangerous query types. DDoS mitigation capabilities are now integrated into many cloud services, giving smaller organizations access to defenses that were once available only to the largest carriers.

The reality we face is straightforward: DNS amplification isn’t going away. But with layered defenses, vigilant monitoring, and infrastructures designed with peak loads in mind, organizations can blunt its force. The goal is not perfection but continuity: ensuring that, even in the face of terabit-scale floods, the service we all take for granted (the ability to resolve a name) remains steady, reliable, and invisible to end users. That quiet reliability is, in the end, the true measure of resilience.

Take advantage of the SafeDNS trial period and try all the best features